User Tests

The self-assessment video feature rests on the assumption that if language learners are given the chance to take risks and even make mistakes, their practice time will become more effective. Therefore, rather than constantly telling learners whether their speech is right or wrong, LanguageBug would prompt them to record a video of themselves after each practice.

Learners would then be able to evaluate their own learning progress and assess their language proficiency. The purpose of conducting user tests was to collect initial feedback on this feature and to generate more design questions.

Primary goal

My peers and instructor made me aware of other hypotheses and some concerns related to the self-assessment video feature, such as:

- Would this kind of video really be motivating to learners?

- Could these videos make learners feel shy and embarrassed?

- Would learners be interested in watching themselves speaking?

The primary goal of the user tests was to collect initial information that could guide me in verifying these hypotheses.

Secondary goals

Conducting user tests would also give me general insights on usability by:

- evaluating whether the mechanics of the application work well,

- checking whether the features are easy to access and user-friendly,

- understanding how users feel while using the app,

- discovering additional gaps I would like to address, and

- learning about other perceptions of and reflections on the app.

Research method

I performed a “Wizard of Oz” simulation of the LanguageBug application. According to the Handbook of Human-Computer Interaction (Helander, 2014), the “Wizard of Oz” method is one in which “the interface is simulated with the aid of human confederates” (p. 987). The handbook adds:

“This is particularly useful in cases where a complex task machine would have to be built in order to test an actual implementation of the design” (Helander, 2014, p. 987).

My roles as the “Wizard of Oz” were:

- to walk users through the different simulated app screens,

- to say the Portuguese words aloud whenever the app would play audio,

- to give command prompts to the users in an established order,

- to praise effort and encourage learners to keep trying, and

- to record the learners’ performance after each practice.

Throughout each test, I would observe the users. I would also refrain from:

- providing additional information on the prompts,

- explaining the sentences beyond their translation, and

- making any corrections or otherwise intervening.

Brief interview

After the tests, I conducted a brief one-on-one interview with each participant, in which I asked open questions such as:

- how are you feeling?

- how did you like this experience?

- do you have any feedback or concern you want to share?

- would you try to learn a language this way?

Post-test questionnaire

A few days after the test session, I sent each participant their self-assessment video along with a post-test questionnaire to assess:

- how much learning they believe they have accomplished,

- how they felt when they saw themselves speaking Portuguese,

- how they would evaluate their own performance, and

- whether they would expect to perform better after a second practice session.

Recruitment strategy

Since LanguageBug targets adult learners who do not speak Portuguese, there were many eligible user testers in the DMDL/G4L programs. I selected user testers based on their responses to a quick online survey (see: Appendix 2 - Survey), which assessed:

- prior experience with or exposure to the Portuguese language,

- interest in learning Portuguese, and

- beliefs about their own language-learning ability.

Location

The user tests were conducted at NYU MAGNET.

Duration

Each user test session lasted approximately 1 hour.

Materials

A low-fidelity prototype built in Google Slides was used during the user tests. The slides presented users with the actual content from LanguageBug: Portuguese.

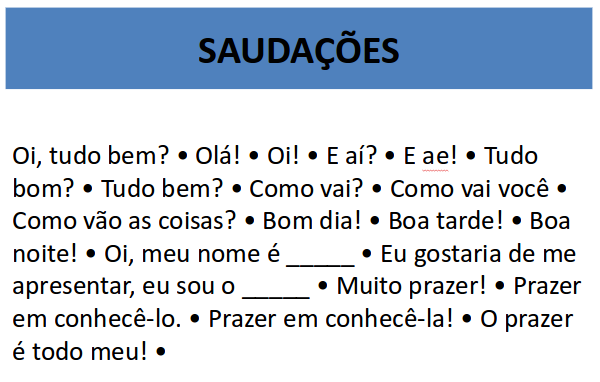

Image 23 - Screenshot of the material used in the user tests

Script

In the user tests, I covered the following content:

- Greetings

- Adjectives

- Numbers

- Self-introduction

For each of these content items, there was a slide that contained words and expressions in Portuguese. I showed users each of these slides and performed the following actions:

Speaking

- Read aloud the first word (in Portuguese).

- Ask the user to try to mimic my speech (read in Portuguese).

- Repeat this action with the following words, until the list is completed.

Translating

- Read aloud the translation of the first word (in English).

- Ask the user to repeat that translation after me (repeat in English).

- Repeat this action with the following words, until the list is completed.

Practicing

- Ask users to read (in Portuguese) all words by themselves.

- Ask users to translate (to English) all words by themselves.

Recording

- Let the user know I am about to start recording.

- Start recording.

- Repeat the Practicing steps.

- Repeat the Practicing steps, but without the slides.