Individual Project Focus

Another special moment of formative feedback on my project was the Individual Project Focus workshop I conducted on April 5th. This event was also part of the thesis course schedule, so my thesis instructor and some of my peers attended it.

Presenting and discussing my design challenges with them provided me with insights on how to structure my user testing.

Differentiating

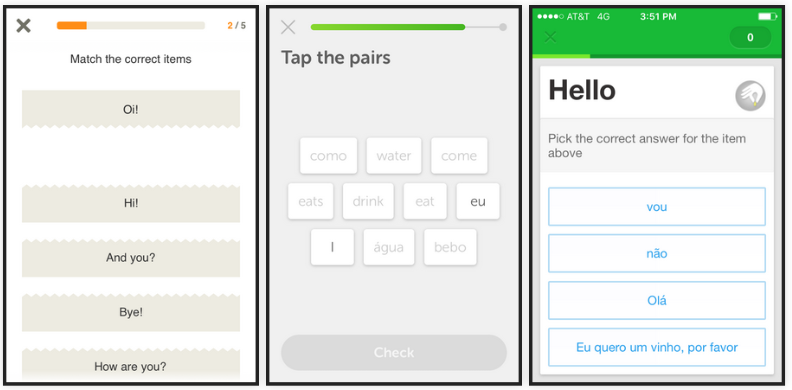

At that time, I was building a solid landscape audit of language learning mobile applications. After experimenting with and analyzing four different services, I was able to show that there is a very homogeneous zeitgeist in mobile language learning:

- most apps were based on tapping and matching mini-games,

- such mini-games rarely prompted learners to speak,

- they were not challenging and did not require much focus, and

- the pace or flow of learning was very slow in all of them.

Image 17 - Most language learning apps work in very similar ways

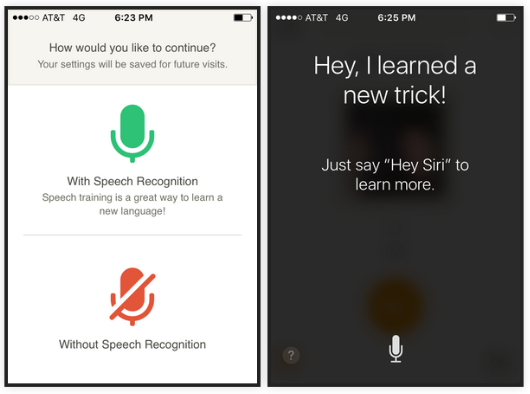

In addition to that, I was able to identify that some language learning apps were relying on speech recognition as a way to give feedback to learners. In my analysis of those speech recognition features, I noticed that:

- processing human speech takes too much time and memory,

- apps would break on a regular basis due to that, and

- the pace or flow of learning would decrease even more.

Image 18 - Speech recognition may cause errors

My questions

Since the beginning of my development process, it was evident to me that I wanted to distance LanguageBug from these specific common practices. It seemed to me that they were overly complicated and did not help learners achieve their goals.

However, Maaike Bouwmeester, my thesis instructor, made an interesting point about feedback and responsiveness. She made me realize that if I neglected both the speech recognition and the matching mini-games, I would be conceiving LanguageBug as an entirely non-responsive app.

In other words, LanguageBug would never be providing actual feedback, because it would never be listening or receiving any information from the learners.

Although my first thought was that this whole idea was perfectly aligned with my language learning beliefs, Maaike’s point was that the user experience would be significantly less pleasant. In fact, I was neglecting some general guidelines of interaction design. According to Saffer (2009), good interactive designs are usually:

Trustworthy: “Before we’ll use a tool, we have to trust that it can do the job” (p. 60).

Clever: “intelligence without smugness or condescension. … And it also implies delight.” (p. 65).

Responsive: “We need to know that the product ‘heard’ what we told it” (p. 64).

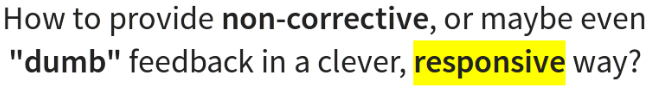

After realizing that, I was left with one important question: “Is it possible to provide non-corrective, or maybe even ‘dumb’ feedback in a clever and responsive way?”

My presentation

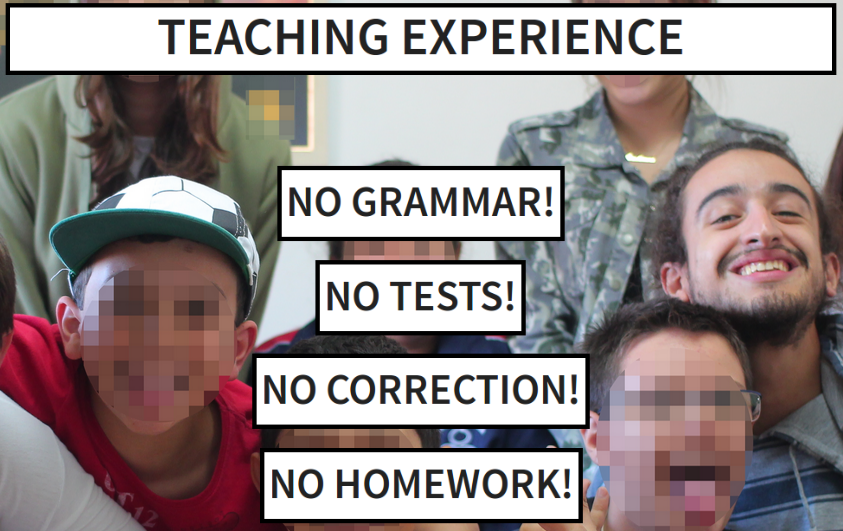

In attempting to get valuable insights on this question, I first had to explain the origin of my strong beliefs on how language learning could work best. I began by sharing my experience as a language teacher at a school in which there was no grammar, no testing, no correction, and no homework.

Image 19 - These are now my strong beliefs on language learning

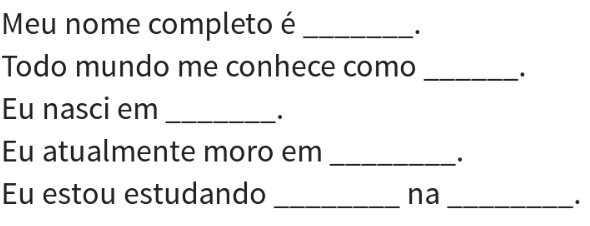

Then, since the approach used at that school was very unusual, I conducted a quick demonstration of how my classes used to happen.

Image 20 - Content covered during the brief demonstration

The way I conducted those classes is still what guides most of my design decisions. Also, having tested that particular approach with hundreds of students over many months really simplifies the process of evaluating the design of LanguageBug.

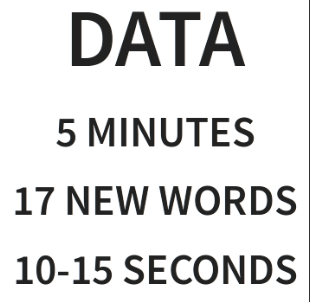

Then, I presented some quantitative data on the learning that had just occurred and collected general feedback from my peers. They seemed to have enjoyed the experience, and most of them agreed it was fairly challenging.

Image 21 - Quantitative data on the brief demonstration

The following step was to help them realize that I had not provided any actual feedback or performed any assessment. No matter how well they spoke Portuguese, I would praise their effort and encourage them to perform other actions in sequence.

After this entire contextualization, I introduced my peers to the actual design challenge and began to moderate a class discussion.

Image 22 - The design challenge I was posing

This entire presentation can be accessed on Individual Project Focus.

Feedback

Most of the initial feedback I received consisted of questions to clarify the approach or comments on the demonstration in which they had participated. My peers and instructor shared that:

- Written clues were considered either helpful or distracting,

- Longer sentences would sometimes make them feel anxious,

- The demonstration had required most of their focus, and

- It was nice to speak Portuguese at the same time other learners were also speaking.

Regarding my specific design challenge, everyone seemed to be unsure of ways to foster design responsiveness without making the learning approach corrective.

Therefore, I decided to propose a solution: self-assessment videos, which the user would record within the app and use to constantly evaluate their own performance and development.

Comments and questions gravitated around these main points:

- How would learners correct pronunciation mistakes of specific words?

- I should create a logic model around this self-recording idea.

- What assumptions am I making when I suggest this feature?

- Would that kind of video really be motivating to learners?

- Could these videos make learners feel shy and embarrassed?

- How about forcing learners to watch their latest performance before initiating another lesson practice?

All these helpful questions and comments have significantly impacted my design process. They made me realize that I had a solid suggestion for a design feature, for which I still needed a more substantial justification.

It became evident that to fill in such theoretical gaps, I could benefit from planning and performing some user testing with those questions in mind.